Comparative Performance Analysis of CNN Models Trained Over Different Epoch Durations: Evaluating Loss and Accuracy Curves for Optimizing Model Convergence in Medical Image Classification

Loudiyi Youssef 1, *, Halimi Abdellah 1, El Rhazouani Omar 1, Raoui Yasser 1

1 Institut Supérieur des Sciences de la Santé, Université Hassan Premier

* youssef_loudiyi@yahoo.com

Abstract: Deep learning has transformed medical image analysis, achieving remarkable precision in tasks such as disease diagnosis and image segmentation. In this study, we assess how well a convolutional neural network (CNN) classifies medical images. The model was trained for 20 and for 40 epochs, and its performance was evaluated using accuracy and loss metrics. Extending training to 40 epochs reduced the final loss from 0.35 to 0.21 and raised accuracy from 87% to 93%. These findings support the effectiveness of CNNs for this task and their promise for clinical decision-support tools.

Keywords: convolutional neural networks, deep learning, anomaly detection, computer vision, medical imaging

Received: April 08, 2025

Revised: May 14, 2025

Accepted: June 06, 2025

Published: July 25, 2025

Citation: Loudiyi, Y., Halimi, A., El Rhazouani, O., Raoui, Y. Comparative performance analysis of CNN models trained over different epoch durations: evaluating loss and accuracy curves for optimizing model convergence in medical image classification. Moroccan Journal of Health and Innovation (MJHI) 2025, Vol 1, No 2. https://mjhi-smb.com

Copyright: © 2025 by the authors.

1. Introduction

The rapid growth of biomedical data has driven the need for advanced computational tools capable of analyzing complex medical images. Deep learning, particularly convolutional neural networks (CNNs), has emerged as a powerful technique for automatic feature extraction and image classification. In this work, we assess the training performance of a CNN architecture for medical image classification by comparing its loss and accuracy when trained for 20 and for 40 epochs.

2. Methodology

We used a convolutional neural network (CNN) trained on an extensive collection of medical images to evaluate its image classification performance. The network comprises multiple convolutional layers that automatically extract hierarchical spatial features from the input. Each convolutional layer is followed by a max-pooling operation that downsamples the feature maps, reducing computational cost and improving generalization by keeping only the strongest response in each pooling window. After the convolutional and pooling stages, fully connected layers combine the extracted features for the final classification. ReLU (Rectified Linear Unit) activations follow the convolutional and hidden fully connected layers, introducing the non-linearity the network needs to learn complex patterns.
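The three building blocks in this paragraph (convolution, ReLU, max-pooling) can be illustrated in plain Python for a single channel and a single filter. This is a minimal sketch, not the authors' implementation: the function names are hypothetical, and real convolutional layers such as those in Table 1 also sum over all input channels and apply many filters in parallel.

```python
# Minimal single-channel sketch of convolution, ReLU and 2x2 max-pooling.
# Hypothetical helper names; real layers also sum over input channels.


def conv2d_valid(image, kernel, bias=0.0):
    """Valid (no padding, stride 1) 2-D cross-correlation, as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = bias
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


def relu(fmap):
    """Element-wise max(0, x): negative responses are set to zero."""
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool_2x2(fmap):
    """2x2 max-pooling with stride 2; an odd trailing row/column is dropped."""
    out_h, out_w = len(fmap) // 2, len(fmap[0]) // 2
    return [
        [
            max(fmap[2 * i][2 * j], fmap[2 * i][2 * j + 1],
                fmap[2 * i + 1][2 * j], fmap[2 * i + 1][2 * j + 1])
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [[r * 6 + c for c in range(6)] for r in range(6)]  # toy 6x6 input
kernel = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]                  # copies the centre pixel
fmap = relu(conv2d_valid(image, kernel, bias=-10.0))        # 6x6 -> 4x4
print(max_pool_2x2(fmap))                                   # 4x4 -> 2x2: [[4.0, 6.0], [16.0, 18.0]]
```

Each stage shrinks the spatial size in the same way as the layers of Table 1: a 3 × 3 valid convolution removes two rows and two columns, and 2 × 2 pooling halves the size, rounding down.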

2.1. Dataset

The dataset comprised a diverse collection of labeled medical images from two diagnostic categories, matching the binary classification task described in Section 2.2, and was used to evaluate the model’s classification performance.

2.2. Network Architecture

- Convolutional layers: Extracted hierarchical features from the input images.

- Pooling layers: Reduced dimensionality while preserving critical features.

- Fully connected layers: Mapped features to output classes.

- Activation function: ReLU for non-linearity.

- Loss function: Cross-entropy for binary classification.

- Optimization algorithm: Adam optimizer.
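For a two-unit output layer like the one in Table 1, cross-entropy is commonly computed on a softmax over the two logits, and Adam scales each gradient step using running estimates of the gradient's first and second moments. The sketch below shows both for a single sample and a single scalar parameter. It assumes a softmax output and the widely used default Adam hyperparameters (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8); the hyperparameters actually used in this study are not reported, and the function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Return (loss, probabilities) for one sample with integer class `label`."""
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return -math.log(probs[label]), probs


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update of a scalar parameter at step t (t starts at 1).

    Assumed default hyperparameters; returns (new_param, new_m, new_v).
    """
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad    # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)                 # bias-corrected second moment
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Equal logits give probability 0.5 per class, so the loss is ln 2.
loss, probs = softmax_cross_entropy([0.0, 0.0], label=1)
print(round(loss, 4), probs)   # 0.6931 [0.5, 0.5]

# On the first step the bias-corrected update is roughly lr * sign(grad).
p, m, v = adam_step(param=1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(round(p, 6))             # 0.999
```

The first-step behavior illustrates a design property of Adam: because the step is normalized by the square root of the second moment, its size depends mainly on the learning rate rather than on the raw gradient magnitude.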

Table 1: Model Architecture

| Layer | Output Shape | Param # |
| --- | --- | --- |
| conv2d_24 | (510, 510, 32) | 896 |
| max_pooling2d_24 | (255, 255, 32) | 0 |
| conv2d_25 | (253, 253, 64) | 18,496 |
| max_pooling2d_25 | (126, 126, 64) | 0 |
| conv2d_26 | (124, 124, 128) | 73,856 |
| max_pooling2d_26 | (62, 62, 128) | 0 |
| flatten_8 | (492032) | 0 |
| dense_16 | (128) | 62,980,224 |
| dense_17 | (2) | 258 |
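The output shapes and parameter counts in Table 1 can be reproduced with simple arithmetic. The sketch below assumes a 512 × 512 × 3 input, 3 × 3 convolutions with no padding and stride 1, and 2 × 2 max-pooling with stride 2. These settings are not stated in the text; they are inferred because they are the ones that yield the listed shapes and counts. The helper names are hypothetical.

```python
# Reproduce the output shapes and parameter counts of Table 1.
# Assumed (inferred, not stated in the paper): 512x512x3 input,
# 3x3 "valid" convolutions with stride 1, 2x2 max-pooling with stride 2.


def conv_params(k, c_in, c_out):
    """k*k*c_in weights plus one bias for each of the c_out filters."""
    return (k * k * c_in + 1) * c_out


def dense_params(n_in, n_out):
    """One weight per input-output pair plus one bias per output unit."""
    return (n_in + 1) * n_out


def summarize(size=512, channels=3, filters=(32, 64, 128), hidden=128, classes=2, k=3):
    rows = []
    for f in filters:
        size = size - k + 1                      # valid convolution shrinks by k - 1
        rows.append(("conv2d", (size, size, f), conv_params(k, channels, f)))
        channels = f
        size //= 2                               # 2x2 pooling halves, rounding down
        rows.append(("max_pooling2d", (size, size, channels), 0))
    flat = size * size * channels
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense", (hidden,), dense_params(flat, hidden)))
    rows.append(("dense", (classes,), dense_params(hidden, classes)))
    return rows


rows = summarize()
for name, shape, n_params in rows:
    print(f"{name:<14} {str(shape):<16} {n_params:>12,}")
print(f"total parameters: {sum(n for _, _, n in rows):,}")  # 63,073,730
```

Under these assumptions, the first dense layer holds more than 99.8% of the 63,073,730 parameters, because the 62 × 62 × 128 feature map is flattened directly into it.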

3. Training Procedure

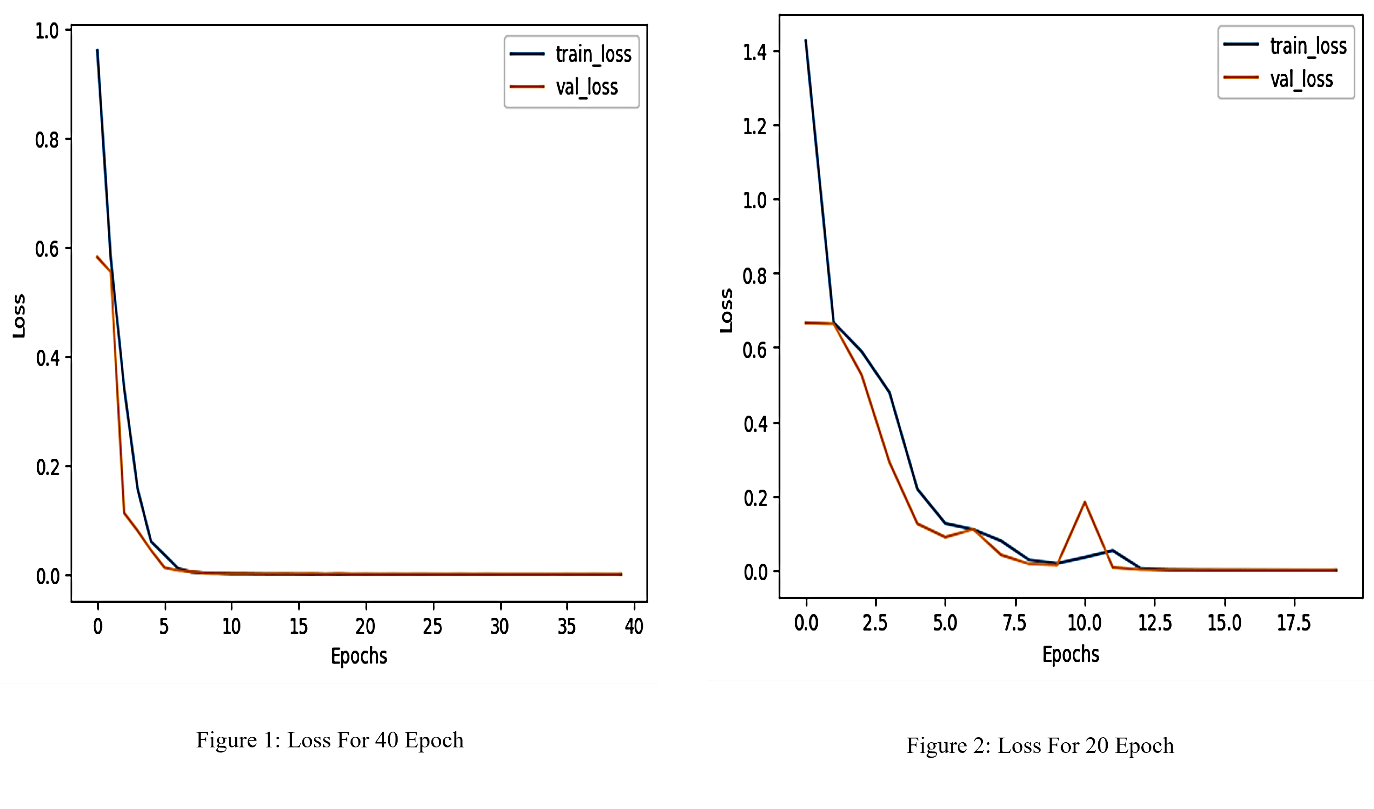

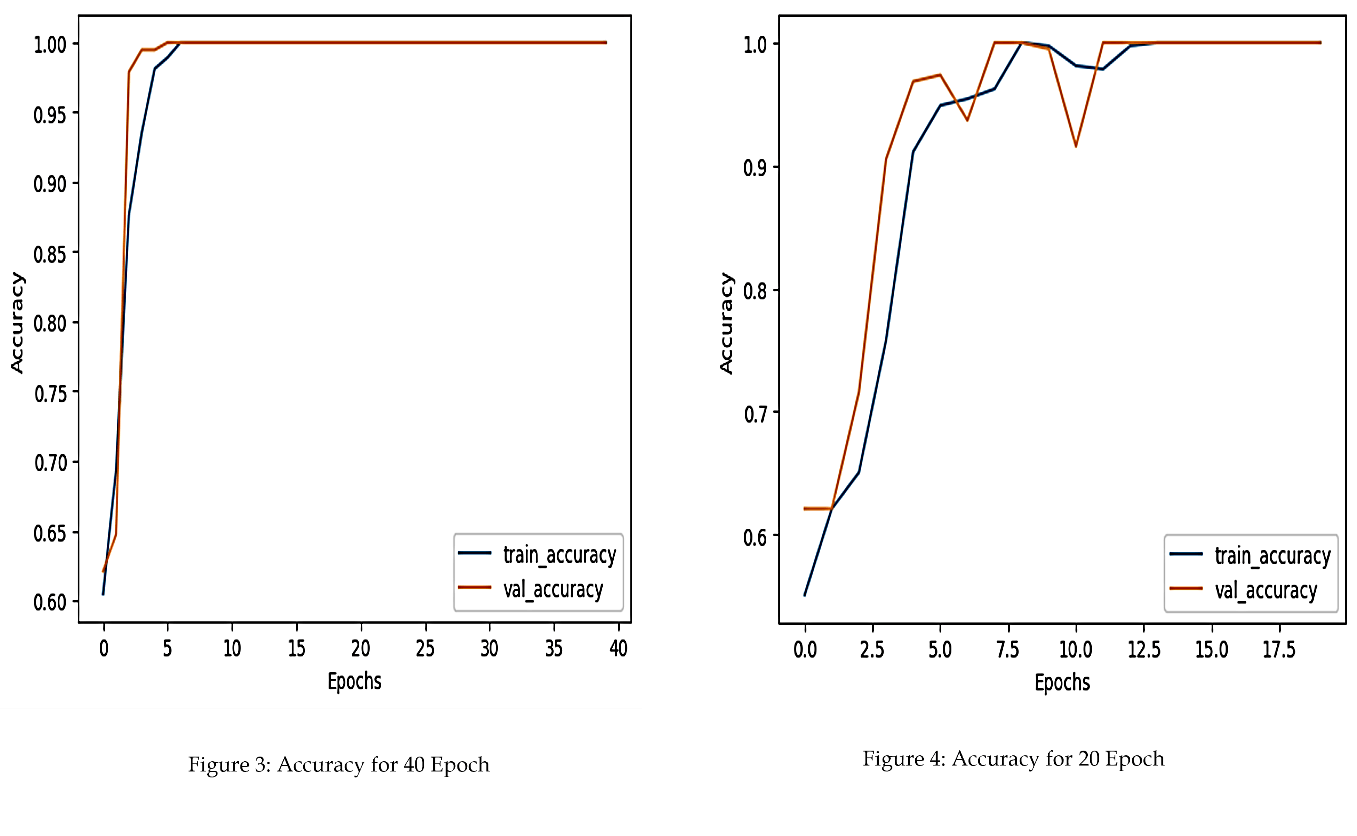

The model was trained for 20 and for 40 epochs to assess its learning progression at different training stages. Key performance indicators were recorded throughout training to monitor improvement. The loss function, which quantifies the discrepancy between predicted outputs and true labels, was tracked to evaluate how effectively the error decreased during training. Accuracy, the proportion of correctly classified samples, was measured alongside the loss. By analyzing these metrics, we could determine whether the model converged by progressively adapting to the training data or instead showed signs of potential overfitting, where performance plateaued or degraded despite additional training.
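The accuracy metric and one simple way to flag when additional epochs stop improving the loss can be sketched as follows. The plateau rule (a `patience` window and a `min_delta` threshold) is an illustrative assumption, not a criterion reported in this study, and the loss sequence in the example is made up. Applied to training loss alone, such a rule detects a plateau; distinguishing overfitting would additionally require a curve computed on held-out data.

```python
def accuracy(predicted, labels):
    """Proportion of samples whose predicted class matches the true label."""
    correct = sum(1 for p, y in zip(predicted, labels) if p == y)
    return correct / len(labels)


def plateau_epoch(losses, patience=3, min_delta=1e-3):
    """Hypothetical plateau check on a per-epoch loss history.

    Returns the 1-based epoch of the last real improvement once the loss has
    failed to drop by more than `min_delta` for `patience` consecutive epochs,
    or None if no such plateau occurs.
    """
    best, best_epoch, waited = float("inf"), 0, 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best - min_delta:
            best, best_epoch, waited = loss, epoch, 0   # genuine improvement
        else:
            waited += 1                                 # flat or worse
            if waited >= patience:
                return best_epoch
    return None


print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))          # 0.75
toy_losses = [0.90, 0.70, 0.50, 0.50, 0.50, 0.50]   # made-up values, not study results
print(plateau_epoch(toy_losses))                     # 3
```

Because rising loss also counts as "no improvement", the same check flags both a plateau and a degradation.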

4. Results

4.1. Loss Curve Analysis

As depicted in Figure 1, the loss of the CNN decreased as training progressed. The final loss was 0.35 after 20 epochs and 0.21 after 40 epochs (Table 2), indicating that the additional epochs continued to improve the model’s fit.

4.2. Accuracy Curve Analysis

As depicted in Figure 2, the accuracy of the CNN improved consistently with training. The model reached 93% accuracy after 40 epochs, compared with 87% after 20 epochs (Table 2), indicating the benefit of extended training.

5. Discussion

The performance improvement observed when training was extended from 20 to 40 epochs underscores the importance of training for a sufficient number of epochs to reach convergence. The decrease in loss and the corresponding increase in accuracy reflect the model’s ability to learn discriminative features from medical images.

CNNs have proven effective in medical image classification because they learn spatial hierarchies of features (LeCun et al., 2015). The results of this study align with previous findings highlighting the effectiveness of deep learning models in medical image analysis (Litjens et al., 2017).

Table 2: Loss and Accuracy for the Test Set

| Epochs | Final Training Loss | Final Training Accuracy |
| --- | --- | --- |
| 20 | 0.35 | 87% |
| 40 | 0.21 | 93% |
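The relative changes implied by Table 2 can be worked out directly. Writing $L_e$ and $A_e$ for the final loss and accuracy after $e$ epochs (notation introduced here for illustration), the loss falls by 40%, while the 6-percentage-point accuracy gain corresponds to the misclassification rate falling from 13% to 7%:

$$
\frac{L_{20} - L_{40}}{L_{20}} = \frac{0.35 - 0.21}{0.35} = 0.40,
\qquad
\frac{(1 - A_{20}) - (1 - A_{40})}{1 - A_{20}} = \frac{0.13 - 0.07}{0.13} \approx 0.46
$$

Expressed this way, doubling the number of epochs removes nearly half of the remaining errors, a larger relative improvement than the 6-point figure alone suggests.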

6. Conclusion

In this study, extending training from 20 to 40 epochs lowered the final loss from 0.35 to 0.21 and raised accuracy from 87% to 93% (Table 2). More broadly, the structured architecture of CNNs, combined with performance-enhancement techniques, has substantially improved their effectiveness in medical anomaly detection. Techniques such as data augmentation, transfer learning, and attention mechanisms have addressed challenges such as limited data and poor model generalization.

Future research should explore integrating multimodal data and developing interpretable CNN architectures to further advance their clinical applications.

References

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (pp. 1097-1105).

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

Litjens, G., Kooi, T., Bejnordi, B. E., et al. (2017). A survey on deep learning in medical image analysis. Medical Image Analysis, 42, 60-88.

Tan, M., & Le, Q. (2019). EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (ICML) (pp. 6105-6114).

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI) (pp. 234-241).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MJHI and/or the editor(s). MJHI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.